2. Foundations for Decision Making

Coordinating Lead Authors:

Roger N. Jones (Australia), Anand Patwardhan (India)

Lead Authors:

Stewart J. Cohen (Canada), Suraje Dessai (UK/Portugal), Annamaria Lammel (France),

Robert J. Lempert (USA), M. Monirul Qader Mirza (Bangladesh/Canada), Hans von Storch

(Germany)

Contributing Authors:

Werner Krauss (Germany), Johanna Wolf (Germany/Canada), Celeste Young (Australia)

Review Editors:

Rosina Bierbaum (USA), Nicholas King (South Africa)

Volunteer Chapter Scientist:

Pankaj Kumar (India/Japan)

This chapter should be cited as:

Jones, R.N., A. Patwardhan, S.J. Cohen, S. Dessai, A. Lammel, R.J. Lempert, M.M.Q. Mirza, and H. von Storch,

2014: Foundations for decision making. In: Climate Change 2014: Impacts, Adaptation, and Vulnerability.

Part A: Global and Sectoral Aspects. Contribution of Working Group II to the Fifth Assessment Report of the

Intergovernmental Panel on Climate Change [Field, C.B., V.R. Barros, D.J. Dokken, K.J. Mach,

M.D. Mastrandrea, T.E. Bilir, M. Chatterjee, K.L. Ebi, Y.O. Estrada, R.C. Genova, B. Girma, E.S. Kissel, A.N. Levy,

S. MacCracken, P.R. Mastrandrea, and L.L. White (eds.)]. Cambridge University Press, Cambridge, United

Kingdom and New York, NY, USA, pp. 195-228.

Table of Contents

Executive Summary

2.1. Introduction and Key Concepts
2.1.1. Decision-Making Approaches in this Report
2.1.2. Iterative Risk Management
2.1.3. Decision Support

2.2. Contexts for Decision Making
2.2.1. Social Context
2.2.1.1. Cultural Values and Determinants
2.2.1.2. Psychology
2.2.1.3. Language and Meaning
2.2.1.4. Ethics
2.2.2. Institutional Context
2.2.2.1. Institutions
2.2.2.2. Governance

2.3. Methods, Tools, and Processes for Climate-related Decisions
2.3.1. Treatment of Uncertainties
2.3.2. Scenarios
2.3.3. Evaluating Trade-offs and Multi-metric Valuation
2.3.4. Learning, Review, and Reframing

2.4. Support for Climate-related Decisions
2.4.1. Climate Information and Services
Box 2-1. Managing Wicked Problems with Decision Support
2.4.1.1. Climate Services: History and Concepts
2.4.1.2. Climate Services: Practices and Decision Support
2.4.1.3. The Geo-political Dimension of Climate Services
2.4.2. Assessing Impact, Adaptation, and Vulnerability on a Range of Scales
2.4.2.1. Assessing Impacts
2.4.2.2. Assessing Vulnerability, Risk, and Adaptive Capacity
2.4.3. Climate-related Decisions in Practice

2.5. Linking Adaptation with Mitigation and Sustainable Development
2.5.1. Assessing Synergies and Trade-offs with Mitigation
2.5.2. Linkage with Sustainable Development: Resilience
2.5.3. Transformation: How Do We Make Decisions Involving Transformation?

References

Frequently Asked Questions

2.1: What constitutes a good (climate) decision?
2.2: Which is the best method for climate change decision making/assessing adaptation?
2.3: Is climate change decision making different from other kinds of decision making?

Executive Summary

Decision support for impacts, adaptation, and vulnerability is expanding from science-driven linear methods to a wide range of

methods drawing from many disciplines (robust evidence, high agreement). This chapter introduces new material from disciplines

including behavioral science, ethics, and cultural and organizational theory, thus providing a broader perspective on climate change decision

making. Previous assessment methods and policy advice have been framed by the assumption that better science will lead to better decisions.

Extensive evidence from the decision sciences shows that while good scientific and technical information is necessary, it is not sufficient, and

decisions require context-appropriate decision-support processes and tools (robust evidence, high agreement). There now exists a sufficiently

rich set of available methods, tools, and processes to support effective climate impact, adaptation, and vulnerability (CIAV) decisions in a wide

range of contexts (medium evidence, medium agreement), although they may not always be appropriately combined or readily accessible to

decision makers. {2.1.1, 2.1.2, 2.1.3, 2.3}

Risk management provides a useful framework for most climate change decision making. Iterative risk management is most

suitable in situations characterized by large uncertainties, long time frames, the potential for learning over time, and the influence

of both climate as well as other socioeconomic and biophysical changes (robust evidence, high agreement).

Complex decision-making

contexts will ideally apply a broad definition of risk, address and manage relevant perceived risks, and assess the risks of a broad range of

plausible future outcomes and alternative risk management actions (robust evidence, medium agreement). The resulting challenge is for people

and organizations to apply CIAV decision-making processes in ways that address their specific aims. {2.1.2, 2.2.1, 2.3, 2.4.3}

Decision support is situated at the intersection of data provision, expert knowledge, and human decision making at a range of

scales from the individual to the organization and institution. Decision support is defined as a set of processes intended to create the

conditions for the production of decision-relevant information and its appropriate use. Such support is most effective when it is context-sensitive,

taking account of the diversity of different types of decisions, decision processes, and constituencies (robust evidence, high agreement).

Boundary organizations, including climate services, play an important role in climate change knowledge transfer and communication, including

translation, engagement, and knowledge exchange (medium evidence, high agreement). {2.1.3, 2.2.1, 2.2, 2.3, 2.4.1, 2.4.2, 2.4.3}

Scenarios are a key tool for addressing uncertainty (robust evidence, high agreement). They can be divided into those that explore

how futures may unfold under various drivers (problem exploration) and those that test how various interventions may play out

(solution exploration).

Historically, most scenarios used for CIAV assessments have been of the former type, though the latter are becoming

more prevalent (medium evidence, high agreement). The new RCP scenario process can address both problem and solution framing in ways

that previous IPCC scenarios have not been able to (limited evidence, medium agreement). {2.2.1.3, 2.3.2}

CIAV decision making involves ethical judgments expressed at a range of institutional scales; the resulting ethical judgments

are a key part of risk governance (robust evidence, medium agreement). Recognition of local and indigenous knowledge and diverse

stakeholder interests, values, and expectations is fundamental to building trust within decision-making processes (robust evidence, high

agreement). {2.2.1.1, 2.2.1.2, 2.2.1.3, 2.2.1.4, 2.4, 2.4.1}

Climate services aim to make knowledge about climate accessible to a wide range of decision makers. In doing so they have to

consider information supply, competing sources of knowledge, and user demand. Knowledge transfer is a negotiated process that takes a

variety of cultural values, orientations, and alternative forms of knowledge into account (medium evidence, high agreement). {2.4.1, 2.4.2}

Climate change response can be linked with sustainable development through actions that enhance resilience, the capacity to

change in order to maintain the same identity while also maintaining the capacity to adapt, learn, and transform.

Mainstreamed

adaptation, disaster risk management, and new types of governance and institutional arrangements are being studied for their potential to

support the goal of enhanced resilience (medium evidence, high agreement). {2.5.2}

Transformational adaptation may be required if incremental adaptation proves insufficient (medium evidence, high agreement). This

process may require changes in existing social structures, institutions, and values, which can be facilitated by iterative risk management and triple-

loop learning that considers a situation and its drivers, along with the underlying frames and values that provide the situation context. {2.1.2, 2.5.3}

2.1. Introduction and Key Concepts

This chapter addresses the foundations of decision making with respect

to climate impact, adaptation, and vulnerability (CIAV). The Fourth

Assessment Report (AR4) summarized methods for assessing CIAV

(Carter et al., 2007), which we build on by surveying the broader

literature relevant for decision making.

Decision making under climate change has largely been modeled on

the scientific understanding of the cause-and-effect process whereby

increasing greenhouse gas emissions cause climate change, resulting

in changing impacts and risks, potentially increasing vulnerability to

those risks. The resulting decision-making guidance on impacts and

adaptation follows a rational-linear process that identifies potential

risks and then evaluates management responses (e.g., Carter et al.,

1994; Feenstra et al., 1998; Parry and Carter, 1998; Fisher et al., 2007).

This process has been challenged on the grounds that it does not

adequately address the diverse contexts within which climate decisions

are being made, often neglects existing decision-making processes, and

overlooks many cultural and behavioral aspects of decision making

(Smit and Wandel, 2006; Sarewitz and Pielke, 2007; Dovers, 2009; Beck,

2010). While more recent guidance on CIAV decision making typically

accounts for sectoral, regional, and socioeconomic characteristics

(Section 21.3), the broader decision-making literature is still not fully

reflected in current methods. This is despite an increasing emphasis

on the roles of societal impacts and responses to climate change in

decision-making methodologies (high confidence) (Sections 1.1, 1.2,

21.2.1).

The main considerations that inform the decision-making contexts

addressed here are knowledge generation and exchange, who makes

and implements decisions, and the issues being addressed and how

these can be addressed. These decisions occur within a broader social

and cultural environment. Knowledge generation and exchange

includes knowledge generation, development, brokering, exchange, and

application to practice. Decision makers include policymakers, managers,

planners, and practitioners, and range from individuals to organizations

and institutions (Table 21-1). Relevant issues include all areas affected

directly and indirectly by climate impacts or by responses to those

impacts, covering diverse aspects of society and the environment.

These issues include consideration of values, purpose, goals, available

resources, the time over which actions are expected to remain effective,

and the extent to which the objectives being pursued are regarded as

appropriate. The purpose of the decision in question, for example,

assessment, strategic planning, or implementation, will also define the

framework and tools needed to enable the process. This chapter neither

provides any standard template or instructions for decision making, nor

does it endorse particular decisions over others.

The remainder of this chapter is organized as follows. Section 2.1.2

addresses risk management, which provides an overall framework

suitable for CIAV decision making; Section 2.1.3 introduces decision

support; Section 2.2 discusses contexts for decision making; Section 2.3

discusses methods, tools, and processes; Section 2.4 discusses support

for and application of decision making; and Section 2.5 describes some

of the broader contexts influencing CIAV decision making.

2.1.1. Decision-Making Approaches in this Report

The overarching theme of the chapter and the AR5 report is managing

current and future climate risks (Sections 1.2.4, 16.2, 19.1), principally

through adaptation (Chapters 14 to 17), but also through resilience and

sustainable development informed by an understanding of both impacts

and vulnerability (Section 19.2). The International Standard ISO 31000

defines risk as the effect of uncertainty on objectives (ISO, 2009) and

the Working Group II AR5 Glossary defines risk as the potential for

consequences where something of human value (including humans

themselves) is at stake and where the outcome is uncertain (Rosa, 2003).

However, the Glossary also refers to a more operational definition for

assessing climate-related hazards: risk is often represented as

probability of occurrence of hazardous events or trends multiplied by the

consequences if these events occur. Risk can also refer to an uncertain

opportunity or benefit (see Section 2.2.1.3). This chapter takes a broader

perspective than the latter by including risks associated with taking

action (e.g., will this adaptation strategy be successful?) and the

broader socially constructed risks that surround “climate change” (e.g.,

fatalism, hope, opportunity, and despair).
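The operational definition given above (probability of occurrence multiplied by consequence) can be illustrated with a small calculation. This is a minimal sketch only, not a method prescribed by this chapter or the Glossary: the event names, probabilities, and consequence values are hypothetical, and `HazardEvent` and `operational_risk` are illustrative names introduced here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HazardEvent:
    """A hazardous event or trend; all values used below are hypothetical."""
    name: str
    probability: float  # probability of occurrence over the period, in [0, 1]
    consequence: float  # consequence if the event occurs, in any consistent unit


def operational_risk(event: HazardEvent) -> float:
    """Risk represented as probability of occurrence times consequence."""
    if not 0.0 <= event.probability <= 1.0:
        raise ValueError("probability must lie between 0 and 1")
    return event.probability * event.consequence


# Hypothetical events, for illustration only.
events = [
    HazardEvent("river flood", probability=0.02, consequence=500.0),
    HazardEvent("heatwave", probability=0.10, consequence=80.0),
]

for event in events:
    print(f"{event.name}: {operational_risk(event):.1f}")
```

A calculation of this kind covers only the operational definition. It does not capture the risks of taking action or the socially constructed risks that this chapter also includes.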

Because all decisions on CIAV are affected by uncertainty and focus on

valued objectives, all can be considered as decisions involving risk

(e.g., Giddens, 2009) (high confidence). AR4 endorsed iterative risk

management as a suitable decision support framework for CIAV

assessment because it offers formalized methods for addressing

uncertainty, involving stakeholder participation, identifying potential

policy responses, and evaluating those responses (Carter et al., 2007;

IPCC, 2007b; Yohe et al., 2007). The literature shows significant

advances on all these topics since AR4 (Section 1.1.4), greatly expanding

methodologies for assessing impacts, adaptation, and vulnerability in

a risk context (Agrawala and Fankhauser, 2008; Hinkel, 2011; Jones and

Preston, 2011; Preston et al., 2011).

Many different risk methodologies, such as financial, natural disaster,

infrastructure, environmental health, and human health, are relevant for

CIAV decision making (very high confidence). Each methodology utilizes

a variety of different tools and methods. For example, the standard CIAV

methodology follows a top-down cause and effect pathway as outlined

previously. Others follow a bottom-up pathway, starting with a set of

decision-making goals that may be unrelated to climate and consider

how climate may affect those goals (see also Sections 15.2.1, 15.3.1).

Some methodologies such as vulnerability, resilience, and livelihood

assessments are often considered as being different from traditional

risk assessment, but may be seen as dealing with particular stages

within a longer term iterative risk management process. For example,

developing resilience can be seen as managing a range of potential

risks that are largely unpredictable; and sustainable development aims

to develop a social-ecological system robust to climate risks.

A major aim of decision making is to make good or better decisions. Good

and better decisions with respect to climate adaptation are frequently

mentioned in the literature but no universal criterion exists for a good

decision, including a good climate-related decision (Moser and Ekstrom,

2010). This is reflected in the numerous framings linked to adaptation

decision making, each having its advantages and disadvantages

(Preston et al., 2013; see also Section 15.2.1). Extensive evidence from

the decision sciences shows that good scientific and technical information

alone is rarely sufficient to result in better decisions (Bell and Lederman,

2003; Jasanoff, 2010; Pidgeon and Fischhoff, 2011) (high confidence).

Aspects of decision making that distinguish climate change from most

other contexts are the long time scales involved, the pervasive impacts

and resulting risks, and the “deep” uncertainties attached to many of

those risks (Kandlikar et al., 2005; Ogden and Innes, 2009; Lempert and

McKay, 2011). These uncertainties include not only future climate but

also socioeconomic change and potential changes in norms and values

within and across generations.

2.1.2. Iterative Risk Management

Iterative risk management involves an ongoing process of assessment,

action, reassessment, and response (Kambhu et al., 2007; IRGC, 2010)

that will continue—in the case of many climate-related decisions—for

decades if not longer (National Research Council, 2011). This development

is consistent with an increasing focus on risk governance (Power, 2007;

Renn, 2008), the integration of climate risks with other areas of risk

management (Hellmuth et al., 2011; Measham et al., 2011), and a wide

range of approaches for structured decision making involving process

uncertainty (Ohlson et al., 2005; Wilson and McDaniels, 2007; Ogden

and Innes, 2009; Martin et al., 2011).

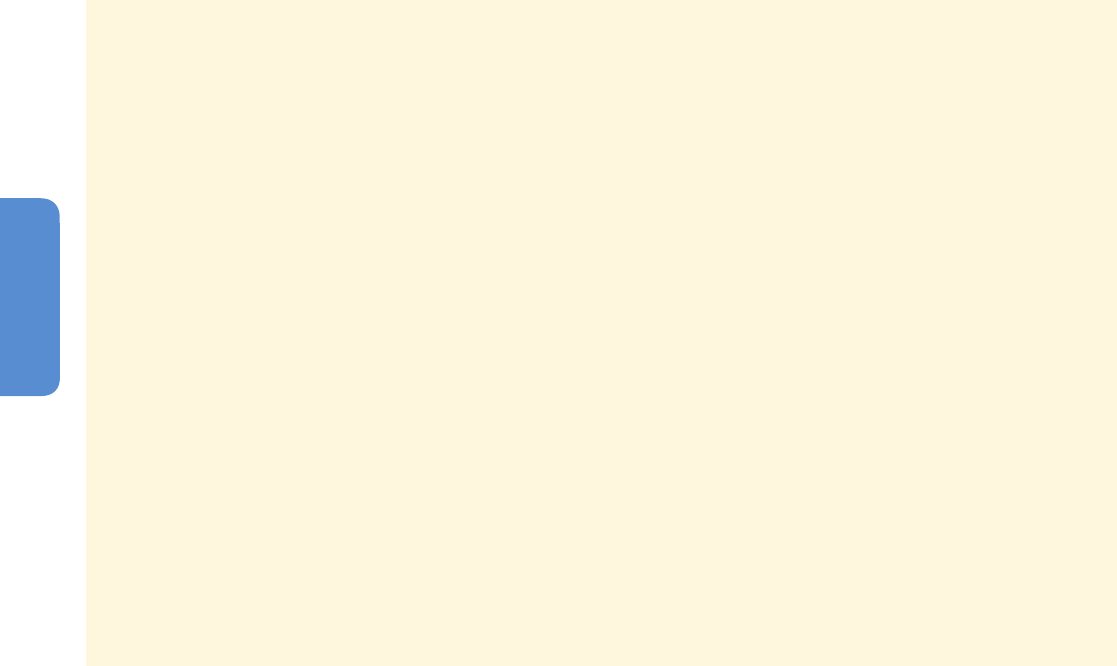

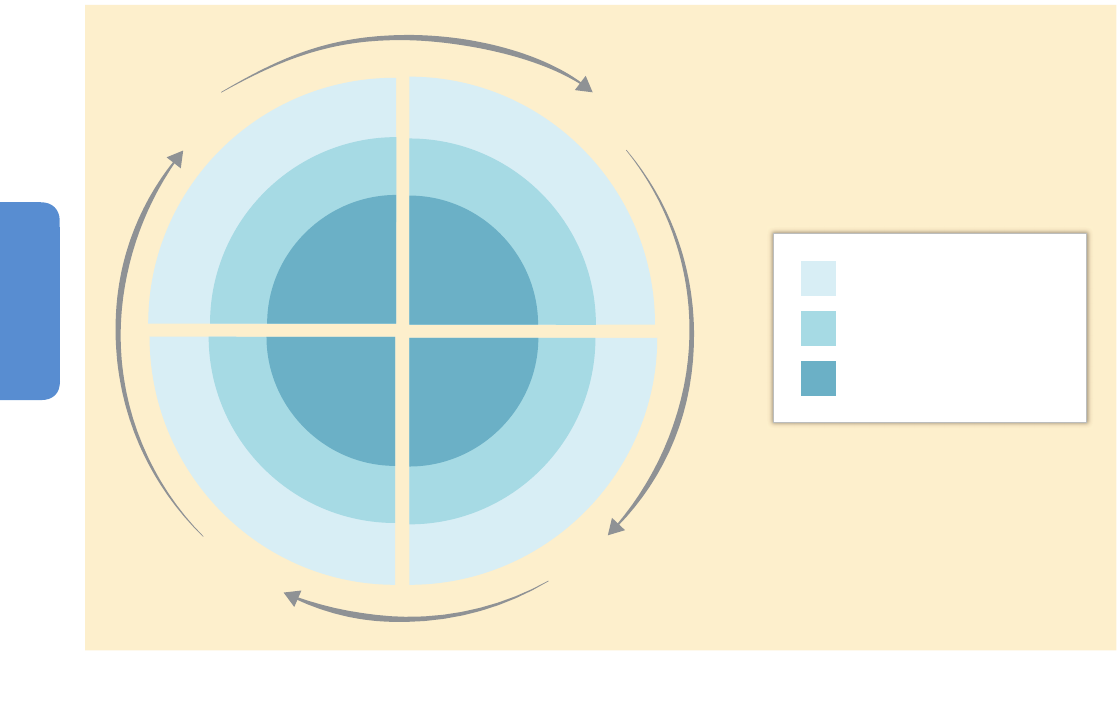

Two levels of interaction can be recognized within the iterative risk

management process: one internal and one external (Figure 2-1).

External factors are present through the entire process and shape the

process outcomes. The internal aspects describe the adaptation process

itself. The first major internal iteration (in yellow) reflects the interplay

with the analysis phase by addressing the interactions between evolving

risks and their feedbacks (not shown) and during the development and

choice of options. This process may also require a revision of criteria

and objectives. This phase ends with decisions on the favored options

being made. A further internal iteration covers the implementation of

actions and their monitoring and review (in orange). Throughout all

stages the process is reflexive, in order to enable changes in knowledge,

risks, or circumstances to be identified and responded to. At the end of

the implementation stage, all stages are evaluated and the process starts

again with the scoping phase. Iterations can be successive, on a set

timetable, triggered by specific criteria or informally by new information

informing risk or a change in the policy environment. An important

aspect of this process is to recognize emergent risks and respond to

them (Sections 19.2.3, 19.2.4, 19.2.5, 19.3).
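The cycle described above can be sketched as a simple loop. This is an illustrative sketch under stated assumptions, not a tool from Willows and Connell (2003) or from this report: `Phase`, `run_cycle`, and the `needs_revision` callback are hypothetical names, and the single return to scoping stands in for the revision of criteria and objectives mentioned in the text.

```python
from enum import Enum
from typing import Callable, List


class Phase(Enum):
    SCOPING = "scoping"
    ANALYSIS = "analysis"
    IMPLEMENTATION = "implementation"


def run_cycle(
    iterations: int,
    needs_revision: Callable[[int, Phase], bool],
) -> List[str]:
    """Trace the phases visited over a number of full iterations.

    needs_revision(i, phase) is a hypothetical hook. When it returns True
    during analysis, criteria and objectives are revisited by returning to
    scoping once before a decision on options is made.
    """
    trace: List[str] = []
    for i in range(iterations):
        trace.append(f"{i}:{Phase.SCOPING.value}")
        trace.append(f"{i}:{Phase.ANALYSIS.value}")
        if needs_revision(i, Phase.ANALYSIS):
            # Inner iteration: revise criteria and objectives, then re-analyse.
            trace.append(f"{i}:{Phase.SCOPING.value}")
            trace.append(f"{i}:{Phase.ANALYSIS.value}")
        # Decision made: implement, monitor, and review, then start again.
        trace.append(f"{i}:{Phase.IMPLEMENTATION.value}")
    return trace


# Example: the first iteration triggers a revision, the second does not.
print(run_cycle(2, lambda i, phase: i == 0))
```

In practice the trigger for a new iteration could be a set timetable, specific criteria, or new information, as the text notes; the callback here is only a placeholder for any of these.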

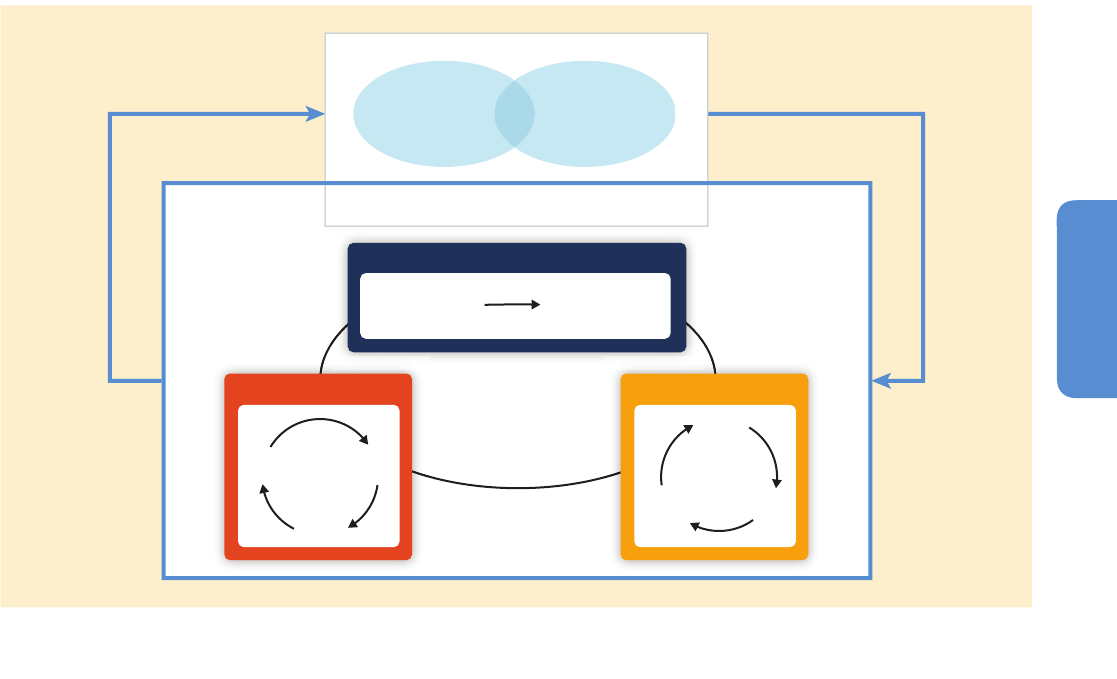

Complexity is an important attribute for framing and implementing

decision-making processes (very high confidence). Simple, well-bounded

contexts involving cause and effect can be addressed by straightforward

linear methods. Complicated contexts require greater attention to process

but can generally be unravelled, providing an ultimate solution (Figure

2-2). However, when complex environments interact with conflicting

values they become associated with wicked problems. Wicked problems

are not well bounded, are framed differently by various groups and

individuals, harbor large uncertainties ranging from the scientific to the existential, and have

unclear solutions and pathways to those solutions (Rittel and Webber,

1973; Australian Public Service Commission, 2007). Such “deep

uncertainty” cannot easily be quantified (Dupuy and Grinbaum, 2005;

Kandlikar et al., 2005). Another important attribute of complex

systems is reflexivity, where cause and effect feed back into each

other (see Glossary). For example, actions taken to manage a risk will

affect the outcomes, requiring iterative processes of decision making

(very high confidence). Under climate change, calculated risks will

also change with time as new knowledge becomes available (Ranger

et al., 2010).

Frequently Asked Questions

FAQ 2.1 | What constitutes a good (climate) decision?

No universal criterion exists for a good decision, including a good climate-related decision. Seemingly reasonable

decisions can turn out badly, and seemingly unreasonable decisions can turn out well. However, findings from

decision theory, risk governance, ethical reasoning, and related fields offer general principles that can help improve

the quality of decisions made.

Good decisions tend to emerge from processes in which people are explicit about their goals; consider a range of

alternative options for pursuing their goals; use the best available science to understand the potential consequences

of their actions; carefully consider the trade-offs; contemplate the decision from a wide range of views and

vantages, including those who are not represented but may be affected; and follow agreed-upon rules and norms

that enhance the legitimacy of the process for all those concerned. A good decision will be implementable within

constraints such as current systems and processes, resources, knowledge, and institutional frameworks. It will have

a given lifetime over which it is expected to be effective, and a process to track its effectiveness. It will have defined

and measurable criteria for success, in that monitoring and review is able to judge whether measures of success

are being met, or whether those measures, or the decision itself, need to be revisited.

A good climate decision requires information on climate, its impacts, potential risks, and vulnerability to be integrated

into an existing or proposed decision-making context. This may require a dialog between users and specialists to

jointly ascertain how a specific task can best be undertaken within a given context with the current state of scientific

knowledge. This dialog may be facilitated by individuals, often known as knowledge brokers or extension agents,

and boundary organizations, who bridge the gap between research and practice. Climate services are boundary

organizations that provide and facilitate knowledge about climate, climate change, and climate impacts for planning,

decision making, and general societal understanding of the climate system.

In complex situations, sociocultural and cognitive-behavioral contexts

become central to decision making. This requires combining the scientific

understanding of risk with how risks are framed and perceived by

individuals, organizations, and institutions (Hansson, 2010). For that

reason, formal risk assessment is moving from a largely technocratic

exercise carried out by experts to a more participatory process of decision

support (Fiorino, 1990; Pereira and Quintana, 2002; Renn, 2008),

although this process is proceeding slowly (Christoplos et al., 2001;

Pereira and Quintana, 2002; Bradbury, 2006; Mercer et al., 2008).

Different traditional and modern epistemologies, or “ways of knowing”

exist for risk (Hansson, 2004; Althaus, 2005; Hansson, 2010),

vulnerability (Weichselgartner, 2001; O’Brien et al., 2007), and

adaptation assessments (Adger et al., 2009), affecting the way they are

framed by various disciplines and are also understood by the public

(Garvin, 2001; Adger, 2006; Burch and Robinson, 2007). These differences

have been identified as a source of widespread misunderstanding and

disagreement. They are also used to warn against a uniform epistemic

approach (Hulme, 2009; Beck, 2010), a critique that has been leveled

against previous IPCC assessments (e.g., Hulme and Mahony, 2010).

The following three types of risk have been identified as important

epistemological constructs (Thompson, 1986; Althaus, 2005; Jones,

2012):

1. Idealized risk: the conceptual framing of the problem at hand. For

example, dangerous anthropogenic interference with the climate

system is how climate change risk is idealized within the UNFCCC.

2. Calculated risk: the product of a model based on a mixture of

historical (observed) and theoretical information. Frequentist or

recurrent risks often utilize historical information whereas single-

event risks may be unprecedented, requiring a more theoretical

approach.

3. Perceived risk: the subjective judgment people make about an

idealized risk (see also Section 19.6.1.4).

These different types show risk to be partly an objective threat of harm

and partly a product of social and cultural experience (Kasperson et al.,

1988; Kasperson, 1992; Rosa, 2008). The aim of calculating risk is to be

as objective as possible, but the subjective nature of idealized and

perceived risk reflects the division between positivist (imposed norms)

and constructivist (derived norms) approaches to risk from the natural

and social sciences respectively (Demeritt, 2001; Hansson, 2010).

Idealized risk is important for framing and conceptualizing risk and will

often have formal and informal status in the assessment process,

contributing to both calculated and perceived risk. These types of risk

combine at the societal scale as socially constructed risk, described and

assessed in a wide range of research literature such as psychology,

anthropology, geography, ethics, sociology, and political science (see

Sections 2.2.1.2, 19.6.1.4).

Figure 2-1 | Iterative risk management framework depicting the assessment process, and indicating multiple feedbacks within the system and extending to the overall context

(adapted from Willows and Connell, 2003). Figure labels: Scoping (identify risks, vulnerabilities, and objectives; establish decision-making criteria); Analysis (assess risks; identify options; evaluate tradeoffs); Implementation (implement decision; monitor; review and learn); Knowledge; Context; Deliberative Process; People.

Acceptance of the science behind controversial risks is strongly influenced

by social and cultural values and beliefs (Leiserowitz, 2006; Kahan et

al., 2007; Brewer and Pease, 2008). Risk perceptions can be amplified

socially where events pertaining to hazards interact with psychological,

social, institutional, and cultural processes in ways that heighten or

attenuate individual and social perceptions of risk and shape risk

behavior (Kasperson et al., 1988; Renn et al., 1992; Pidgeon et al.,

2003; Rosa, 2003; Renn, 2011). The media have an important role in

propagating both calculated and perceived risk (Llasat et al., 2009),

sometimes to detrimental effect (Boykoff and Boykoff, 2007; Oreskes

and Conway, 2010; Woods et al., 2012).

Understanding how these perceptions resonate at an individual and

collective level can help overcome constraints to action (Renn, 2011).

Science is most suited to calculating risk in areas where it has predictive

skill and will provide better estimates than may be obtained through

more informal methods (Beck, 2000), but an assessment of what is at

risk generally needs to be accepted by stakeholders (Eiser et al., 2012).

Therefore, the science always sits within a broader social setting

(Jasanoff, 1996; Demeritt, 2001; Wynne, 2002; Demeritt, 2006), often

requiring a systems approach where science and policy are investigated

in tandem, rather than separately (Pahl-Wostl, 2007; Ison, 2010) (very

high confidence). These different types of risk give rise to complex

interactions between formal and informal knowledge that cannot be

bridged by better science or better predictions but require socially and

culturally mediated processes of engagement (high confidence).

2.1.3. Decision Support

The concept of decision support provides a useful framework for

understanding how risk-based concepts and information can help

enhance decision making (McNie, 2007; National Research Council Panel

on Design Issues for the NOAA Sectoral Applications Research Program

et al., 2007; Moser, 2009; Romsdahl and Pyke, 2009; Kandlikar et al.,

2011; Pidgeon and Fischhoff, 2011). The concept also helps situate

methods, tools, and processes intended to improve decision making

within appropriate institutional and cultural contexts.

Decision support is defined as “a set of processes intended to create

the conditions for the production of decision-relevant information and

for its appropriate use” (National Research Council, 2009a, p. 33).

Information is decision-relevant if it yields deeper understanding of, or

is incorporated into making a choice that improves outcomes for decision

makers and stakeholders or precipitates action to manage known risks.

Effective decision support provides users with information they find useful

because they consider it credible, legitimate, actionable, and salient

(e.g., Jones et al., 1999; Cash et al., 2003; Mitchell, 2006; Reid et al.,

2007). Such criteria can be used to evaluate decision support and such

evaluations lead to common principles of effective decision support,

which have been summarized in National Research Council (2009b) as:

• Begins with users’ needs, not scientific research priorities. Users

may not always know their needs in advance, so user needs are

often developed collaboratively and iteratively among users and

researchers.

• Emphasizes processes over products. Though the information

products are important, they are likely to be ineffective if they are

not developed to support well-considered processes.

[Figure 2-2: circles depicting calculated risk and perceived risk for simple, complicated, and complex risks; circle size increases with uncertainty.]

Characteristics of decision making:

Methodology. Simple risk: linear, cause and effect. Complicated risk: top down and/or bottom up, iterative. Complex risk: iterative and/or adaptive, ongoing and systemic.

Approach. Simple risk: analytic and technical. Complicated risk: collaborative process with technical input. Complex risk: process driven; frame and model multiple drivers and valued outcomes.

Stakeholder strategy. Simple risk: communication. Complicated risk: collaboration. Complex risk: deliberation, creating shared understanding and ownership.

Mental models. Simple risk: common model. Complicated risk: negotiated and shared. Complex risk: contested initially and negotiated over project.

Values and outcomes. Simple risk: widely accepted. Complicated risk: negotiated over project by user perspectives and calculated risk. Complex risk: contested initially and negotiated over project.

Monitoring. Simple risk: straightforward. Complicated risk: with review and trigger points. Complex risk: as real-time as possible, adaptive with management feedback and trigger points.

Figure 2-2 | Hierarchy of simple, complicated, and complex risks, showing how perceived risks multiply and become less connected with calculated risk with increasing complexity. Also shown are major characteristics of assessment methods for each level of complexity.


• Incorporates systems that link users and producers of information.

These systems generally respect the differing cultures of decision

makers and scientists, but provide processes and institutions that

effectively link individuals from these differing communities.

• Builds connections across disciplines and organizations, in order to

provide for the multidisciplinary character of the needed information

and the differing communities and organizations in which this

information resides.

• Seeks institutional stability, either through stable institutions and/or

networks, which facilitates building the trust and familiarity needed

for effective links and connections among information users and

producers in many different organizations and communities.

• Incorporates learning, so that all parties recognize the need for and

contribute to the implementation of decision support activities

structured for flexibility, adaptability, and learning from experience.

These principles can lead to different decision support processes

depending on the stage and context of the decision in question. For

instance, decision support for a large water management agency

operating an integrated system serving millions of people will have

different needs than a small town seeking to manage its groundwater

supplies. A community in the early stages of developing a response to

climate change may be more focused on raising awareness of the issue

among its constituents, while a community with a well-developed

understanding of its risks may be more focused on assessing trade-offs

and allocating resources.

2.2. Contexts for Decision Making

This section surveys aspects of decision making that relate to context

setting. Social context addresses cultural values, psychology, language,

and ethics (Section 2.2.1) and institutional context covers institutions

and governance (Section 2.2.2).

2.2.1. Social Context

Decision support for CIAV must recognize that diverse values, language

uses, ethics, and human psychological dimensions play a crucial role in

the way that people use and process information and take decisions

(Kahan and Braman, 2006; Leiserowitz, 2006). As illustrated in Figure

2-1, the context defines and frames the space in which decision-making

processes operate.

2.2.1.1. Cultural Values and Determinants

Cultural differences allocate values and guide socially mediated change.

Five value dimensions that show significant cross-national variations

are: power distance, individualism/collectivism, uncertainty avoidance,

long-/short-term orientation, and masculinity/femininity (Hofstede, 1980,

2001; Hofstede et al., 2010). Power distance and individualism/

collectivism both show a link to climate via latitude; the former relates

to willingness to conform to top-down directives, whereas the latter

relates to the potential efficacy of market-/community-based strategies.

Uncertainty avoidance and long-term orientation show considerable

variation between countries (Hofstede et al., 2010), potentially producing

significant differences in risk perception and agency.

Environmental values have also been linked to cultural orientation.

Schultz et al. (2004) identified the association between self and nature in

people as being implicit—informing actions without specific awareness.

A strong association was linked to a more connected self and a weaker

association with a more egoistic self. Explicit environmental values can

substantially influence climate change-related decision-making processes

(Nilsson et al., 2004; Milfont and Gouveia, 2006; Soyez et al., 2009) and

public behavior toward policies (Stern and Dietz, 1994; Xiao and

Dunlap, 2007). Schaffrin (2011) concludes that geographical aspects,

vulnerability, and potential policy benefits associated with a given issue

can influence individual perceptions and willingness to act (De Groot

and Steg, 2007, 2008; Shwom et al., 2008; Milfont et al., 2010). Cultural

values can interrelate with specific physical situations of climate change

(Corraliza and Berenguer, 2000), or seasonal and meteorological factors

influencing people’s implicit connections with nature (Duffy and Verges,

2010). Religious and sacred values are also important (Goloubinoff,

1997; Katz et al., 2002; Lammel et al., 2008), informing the perception

of climate change and risk, as well as the actions to adapt (Crate and

Nuttal, 2009; see also Section 16.3.1.3). The role of protected values

(values that people will not trade off, or negotiate) can also be culturally

and spiritually significant (Baron and Spranca, 1997; Baron et al., 2009;

Hagerman et al., 2010). Adger et al. (2013) emphasize the importance

of cultural values in assessing risks and adaptation options, suggesting

they are at least as important as economic values in many cases, if not

more so. These aspects are important for framing and conceptualizing

CIAV decision making. Cultural and social barriers are described in

Section 16.3.2.7.

Two distinct ways of thinking—holistic and analytical thinking—reflect

the relationship between humans and nature and are cross-culturally

and even intra-culturally diverse (Gagnon Thompson and Barton, 1994;

Huber and Pedersen, 1997; Atran et al., 2005; Ignatow, 2006; Descola,

2010; Ingold, 2011). Holistic thinking is primarily gained through

experience and is dialectical, accepting contradictions and integrating

multiple perspectives. Characteristic of collectivist societies, the holistic

conceptual model considers that social obligations are reciprocal and

individuals take an active part in the community for the benefit of all

(Peng and Nisbett, 1999; Nisbett et al., 2001; Lammel and Kozakai,

2005; Nisbett and Miyamoto, 2005). Analytical thinking isolates the

object from its broader context, understanding its characteristics

through categorization, and predicting events based on intrinsic rules.

In the analytic conceptual model, individual interests take precedence

over the collective; the self is independent and communication comes

from separate fields. These differences influence the understanding of

complex systemic phenomena such as climate change (Lammel et al.,

2011, 2012, 2013) and decision-making practices (Badke-Schaub and

Strohschneider, 1998; Strohschneider and Güss, 1999; Güss et al., 2010).

The above models vary greatly across the cultural landscape, but neither

model alone is sufficient for decision making in complex situations (high

confidence). At a very basic level, egalitarian societies may respond

more to community-based adaptation in contrast to more individualistic

societies that respond to market-based forces (medium confidence). In

small-scale societies, knowledge about climate risks is often integrated

into a holistic view of community and environment (e.g., Katz et al.,

2002; Strauss and Orlove, 2003; Lammel et al., 2008). Many studies

highlight the importance of integrating local, traditional knowledge with

scientific knowledge when assessing CIAV (Magistro and Roncoli, 2001;

Krupnik and Jolly, 2002; Vedwan, 2006; Nyong et al., 2007; Dube and

Sekhwela, 2008; Crate and Nuttal, 2009; Mercer et al., 2009; Roncoli et

al., 2009; Green and Raygorodetsky, 2010; Orlove et al., 2010; Crate,

2011; Nakashima et al., 2012; see also Sections 12.3, 12.3.1, 12.3.2,

12.3.3, 12.3.4, 14.4.5, 14.4.7, 15.3.2.7, 25.8.2, 28.2.6.1, 28.4.1). For

example, a case study in Labrador (Canada) demonstrated the need to

account for local material and symbolic values because they shape the

relationship to the land, underlie the way of life, influence the intangible

effects of climate change, and can lead to diverging views on

adaptations (Wolf et al., 2012). In Kiribati, the integration of local cultural

values attached to resources/assets is fundamental to adaptation

planning and water management; otherwise technology will not be

properly utilized (Kuruppu, 2009).

2.2.1.2. Psychology

Psychology plays a significant role in climate change decision making

(Gifford, 2008; Swim et al., 2010; Anderson, 2011). Important

psychological factors for decision making include perception,

representation, knowledge acquisition, memory, behavior, emotions,

and understanding of risk (Böhm and Pfister, 2000; Leiserowitz, 2006;

Lorenzoni et al., 2006; Oskamp and Schultz, 2006; Sterman and

Sweeney, 2007; Gifford, 2008; Kazdin, 2009; Sundblad et al., 2009;

Reser et al., 2011; Swim et al., 2011).

Psychological research contributes to understanding of both risk

perception and the process of adaptation. Several theories, such as

multi-attribute utility theory (Keeney, 1992), prospect theory (Kahneman

and Tversky, 1979; Hardman, 2009), and cumulative prospect theory

(Tversky and Kahneman, 1992), relate to decision making under uncertainty,

especially to risk perception and agency. Adaptation in complex

situations pits an unsure gain against an unsure loss, and so creates an

asymmetry in preference that magnifies with time as gains/losses are

expected to accrue in future. Decisions focusing on values and

uncertainty are therefore subject to framing effects. Recent cognitive

approaches include the one-reason decision process that uses limited

data in a limited time period (Gigerenzer and Goldstein, 1996) or decision

by sampling theory that samples real-world data to account for the

cognitive biases observed in behavioral economics (Stewart et al., 2006;

Stewart and Simpson, 2008). Risk perception is further discussed in

Section 19.6.1.4.
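The asymmetry between gains and losses described above can be illustrated with a minimal sketch of the value and probability-weighting functions of cumulative prospect theory. The functional forms and the parameter values (0.88, 2.25, 0.61) are the median estimates reported by Tversky and Kahneman (1992), not values given in this chapter; the function names are hypothetical and the sketch is illustrative only.

```python
# Illustrative sketch of cumulative prospect theory building blocks.
# Parameter values are the median estimates from Tversky and Kahneman (1992);
# they are assumptions for illustration, not values stated in this chapter.

ALPHA = 0.88   # curvature of the value function for gains
BETA = 0.88    # curvature of the value function for losses
LAMBDA = 2.25  # loss-aversion coefficient
GAMMA = 0.61   # probability-weighting parameter for gains


def value(x: float) -> float:
    """Subjective value of outcome x, measured relative to a reference point."""
    if x >= 0:
        return x ** ALPHA
    return -LAMBDA * ((-x) ** BETA)


def weight(p: float, gamma: float = GAMMA) -> float:
    """Inverse-S weighting: small probabilities are overweighted,
    large probabilities are underweighted."""
    return p ** gamma / ((p ** gamma + (1 - p) ** gamma) ** (1 / gamma))


if __name__ == "__main__":
    print(f"v(+100) = {value(100.0):.1f}, v(-100) = {value(-100.0):.1f}")
    print(f"w(0.01) = {weight(0.01):.3f}, w(0.99) = {weight(0.99):.3f}")
```

Under these assumed parameters a loss weighs more heavily than an equal-sized gain, which is one way of expressing the asymmetry in preference that framing effects exploit.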

Responses to new information can modify previous decisions, even

producing contradictory results (Grothmann and Patt, 2005; Marx et al.,

2007). Although knowledge about climate change is necessary (Milfont,

2012), understanding such knowledge can be difficult (Rajeev Gowda

et al., 1997; Boyes et al., 1999; Andersson and Wallin, 2000). Cognitive

obstacles in processing climate change information include psychological

distances with four theorized dimensions: temporal, geographical, social

distance, and uncertainty (Spence et al., 2012; see also Section 25.4.3).

Emotional factors also play an important role in climate change

perception, attitudes, decision making, and actions (Meijnders et al.,

2002; Leiserowitz, 2006; Klöckner and Blöbaum, 2010; Fischer and Glenk,

2011; Roeser, 2012) and even shape organizational decision making

(Wright and Nyberg, 2012). Other studies on attitudes and behaviors

relevant to climate change decision making include place attachment

(Scannell and Gifford, 2013; see also Section 25.4.3), political affiliation

(Davidson and Haan, 2011), and perceived costs and benefits (Tobler et

al., 2012). Time is a critical component of action-based decision making

(Steel and König, 2006). As the benefits of many climate change actions

span multiple temporal scales, this can create a barrier to effective

motivation for decisions through a perceived lack of value associated

with long-term outcomes.

Protection Motivation Theory (Rogers, 1975; Maddux and Rogers, 1983),

which proposes that a higher personal perceived risk will lead to a

higher motivation to adapt, can be applied to climate change-related

problems (e.g., Grothmann and Reusswig, 2006; Cismaru et al., 2011).

The person-relative-to-event approach predicts human coping strategies

as a function of the magnitude of environmental threat (Mulilis and

Duval, 1995; Duval and Mulilis, 1999; Grothmann et al., 2013). People’s

responses to environmental hazards and disasters are represented in

the multistage Protective Action Decision Model (Lindell and Perry,

2012). This model helps decision makers respond to long-term threats

and can be applied in long-term risk management. Grothmann and Patt (2005)

developed and tested a socio-cognitive model of proactive private

adaptation to climate change showing that perceptions of adaptive

capacities were important as well as perceptions of risk. If a perceived

high risk is combined with a perceived low adaptive capacity (see

Section 2.4.2.2; Glossary), the response is fatalism, denial, and wishful

thinking.
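The interplay of the two appraisals described above can be sketched as a simple classification. This is a hypothetical simplification, not the model of Grothmann and Patt (2005): the 0 to 1 scoring, the 0.5 threshold, and the names `Response` and `appraise` are assumptions introduced for illustration. Only the high-risk, low-capacity outcome is taken directly from the text; the other two outcomes follow the general expectation that higher perceived risk raises the motivation to adapt.

```python
from enum import Enum


class Response(Enum):
    LOW_MOTIVATION = "little motivation to adapt"
    ADAPTATION = "motivation to adapt"
    AVOIDANCE = "fatalism, denial, and wishful thinking"


def appraise(perceived_risk: float, perceived_capacity: float,
             threshold: float = 0.5) -> Response:
    """Classify a response from two appraisals scored on a 0-1 scale.

    The scoring scale and the threshold are hypothetical simplifications.
    """
    if not (0.0 <= perceived_risk <= 1.0 and 0.0 <= perceived_capacity <= 1.0):
        raise ValueError("appraisal scores must lie in [0, 1]")
    if perceived_risk < threshold:
        # Low perceived risk: little reason to act at all.
        return Response.LOW_MOTIVATION
    if perceived_capacity < threshold:
        # High perceived risk with low perceived adaptive capacity.
        return Response.AVOIDANCE
    # High perceived risk with high perceived adaptive capacity.
    return Response.ADAPTATION


if __name__ == "__main__":
    for risk, capacity in [(0.9, 0.1), (0.9, 0.9), (0.1, 0.9)]:
        print(risk, capacity, "->", appraise(risk, capacity).value)
```

The sketch makes one point explicit: raising perceived risk without also raising perceived adaptive capacity moves a person toward avoidance rather than action.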

Best-practice methods for incorporating and communicating information

about risk and uncertainties into decisions about climate change

(Climate Change Science Program, 2009; Pidgeon and Fischhoff, 2011)

suggest that effective communication of uncertainty requires products

and processes that (1) close psychological distance, explaining why

this information is important to the recipient; (2) distinguish between

and explain different types of uncertainty; (3) establish self-agency,

explaining what the recipient can do with the information and ways to

make decisions under uncertainty (e.g., precautionary principle, iterative

risk management); (4) recognize that each person’s view of risks and

opportunities depends on their values; (5) recognize that emotion is a

critical part of judgment; and (6) provide mental models that help

recipients to understand the connection between cause and effect.

Information providers also need to test their messages, as they may not

be communicating what they think they are.

2.2.1.3. Language and Meaning

Aspects of decision making concerned with language and meaning

include framing, communication, learning, knowledge exchange, dialog,

and discussion. Most IPCC-related literature on language and

communication deals with definitions, predictability, and incomplete

knowledge, with less emphasis given to other aspects of decision

support such as learning, ambiguity, contestedness, and complexity.

Three important areas assessed here are definitions, risk language and

communication, and narratives.


Decision-making processes need to accommodate both specialist and

non-specialist meanings of the concepts they apply. Various disciplines

often have different definitions for the same terms or use different terms

for the same action or object, which is a major barrier for communication

and decision making (Adger, 2003; see also Chapter 21). For example,

adaptation is defined differently with respect to biological evolution,

climate change, and social adaptation. Budescu et al. (2012) found that

people prefer imprecise wording but precise numbers when appropriate.

Personal lexicons vary widely, leading to differing interpretations of

uncertainty terms (Morgan et al., 1990); in the IPCC’s case leading to

uncertainty ranges often being interpreted differently than intended

(Patt and Schrag, 2003; Patt and Dessai, 2005; Budescu et al., 2012).

Addressing both technical and everyday meanings of key terms can help

bridge the analytic and emotive aspects of cognition. For example,

words like danger, disaster, uncertainty, and catastrophe have technical

and emotive aspects (Britton, 1986; Carvalho and Burgess, 2005). Terms

where this issue is especially pertinent include adaptation, vulnerability,

risk, dangerous, catastrophe, resilience, and disaster. Other words have

definitional issues because they contain different epistemological frames;

sustainability and risk are key examples (Harding, 2006; Hamilton et al.,

2007). Many authors advocate that narrow definitions focused solely

on climate need to be expanded to suit the context in which they are

being used (Huq and Reid, 2004; O’Brien et al., 2007; Schipper, 2007).

This is a key role for risk communication, ensuring that different types

of knowledge are integrated within decision context and outlining the

different values—implicit and explicit—involved in the decision process

(e.g., Morgan, 2002; Lundgren and McMakin, 2013).

The language of risk has a crucial role in framing and belief. Section

2.1.2 described over-arching and climate-specific definitions but risk

enters into almost every aspect of social discourse, so is relevant to how

risk is framed and communicated (e.g., Hansson, 2004). Meanings of

risk range from its ordinary use in everyday language to power and

political discourse, health, emergency, disaster, and seeking benefits,

ranging from specific local meanings to broad-ranging concepts such

as the risk society (Beck and Ritter, 1992; Beck, 2000; Giddens, 2000).

Complex framings in the word risk (Fillmore and Atkins, 1992; Hamilton

et al., 2007) feature in general English as both a noun and a verb,

reflecting harm and chance with negative and positive senses (Fillmore

and Atkins, 1992). Problem analysis applies risk as a noun (at-risk),

whereas risk management applies risk as a verb (to-risk) (Jones, 2011).

For simple risks, this transition is straightforward because of agreement

around values and agency (Figure 2-2). In complex situations, risk as a

problem and as an opportunity can compete with each other, and if

socially amplified can lead to action paralysis (Renn, 2011). For example,

unfamiliar adaptation options that seem to be risky themselves will force

a comparison between the risk of maladaptation and future climate risks,

echoing the risk trap where problems and solutions come into conflict

(Beck, 2000). Fear-based dialogs in certain circumstances can cause

disengagement (O’Neill and Nicholson-Cole, 2009), by emphasizing risk

aversion. Young (2013) proposes framing adaptation as a solution to

overcome the limitations of framing through the problem, and links it to

innovation, which provides established pathways for the implementation

of actions, proposing a problem-solution framework linking decision

making to action. Framing decisions and modeling actions on positive risk-

seeking behavior can help people to address uncertainty as opportunity

(e.g., Keeney, 1992).

Narratives are accounts of events with temporal or causal coherence

that may be goal directed (László and Ehmann, 2012) and play a key

role in communication, learning, and understanding. They operate at

the personal to societal scales, are key determinants of framing, and

have a strong role in creating social legitimacy. Narratives can also be

non-verbal: visualization, kinetic learning by doing, and other sensory

applications can be used to communicate science and art and to enable

learning through play (Perlovsky, 2009; Radford, 2009). Narratives of

climate change have evolved over time and invariably represent

uncertainty and risk (Hamblyn, 2009) being characterized as tools for

analysis, communication, and engagement (Cohen, 2011; Jones et al.,

2013; Westerhoff and Robinson, 2013) by:

• Providing a social and environmental context to modelled futures

(Arnell et al., 2004; Kriegler et al., 2012; O’Neill et al., 2014), by

describing aspects of change that drive or shape those futures as

part of scenario construction (Cork et al., 2012).

• Communicating knowledge and ideas to increase understanding

and increase agency, framing it in ways so that actions can be

implemented (Juhola et al., 2011) or provide a broader socio-

ecological context to specific knowledge (Burley et al., 2012). These

narratives bridge the route between scientific knowledge and local

understandings of adaptation, often by working with multiple

actors in order to creatively explore and develop collaborative

potential solutions (Turner and Clifton, 2009; Paton and Fairbairn-

Dunlop, 2010; Tschakert and Dietrich, 2010).

• Exploring responses at an individual/institutional level to an aspect

of adaptation, and communicating that experience with others

(Bravo, 2009; Cohen, 2011). For example, a community that believes

itself to be resilient and self-reliant is more likely to respond

proactively, contrasted to a community that believes itself to be

vulnerable (Farbotko and Lazrus, 2012). Bravo (2009) maintains that

narratives of catastrophic risk and vulnerability demotivate

indigenous peoples whereas narratives combining scientific

knowledge and active citizenship promote resilience (Section 2.5.2).

2.2.1.4. Ethics

Climate ethics can be used to formalize objectives, values (Section

2.2.1.1), rights, and needs into decisions, decision-making processes,

and actions (see also Section 16.7). Principal ethical concerns include

intergenerational equity; distributional issues; the role of uncertainty in

allocating fairness or equity; economic and policy decisions; international

justice and law; voluntary and involuntary levels of risk; cross-cultural

relations; and human relationships with nature, technology, and the

sociocultural world. Climate change ethics have been developing over

the last 20 years (Jamieson, 1992, 1996; Gardiner, 2004; Gardiner et al.,

2010), resulting in a substantial literature (Garvey, 2008; Harris, 2010;

O’Brien et al., 2010; Arnold, 2011; Brown, 2012; Thompson and Bendik-

Keymer, 2012). Equity, inequity, and responsibility are fundamental

concepts in the UNFCCC (UN, 1992) and therefore are important

considerations in policy development for CIAV. Climate ethics examine

effective, responsible, and “moral” decision making and action, not only

by governments but also by individuals (Garvey, 2008).

An important discourse on equity is that industrialized countries have,

through their historical emissions, created a natural debt (Green and

Smith, 2002). Developing nations experience this debt through higher

impacts and greater vulnerability combined with limited adaptive

capacity. Regional inequity is also of concern (Green and Smith, 2002),

particularly indigenous or marginalized populations exposed to current

climate extremes, who may become more vulnerable under a changing

climate (Tsosie, 2007; see also Section 12.3.3). With respect to adaptation

assessment, cost-benefit or cost-effectiveness methods combined with

transfer of funds will not satisfy equity considerations (Broome, 2008;

see also Section 17.3.1.4) and modifications such as equity-weighting

(Kuik et al., 2008) and cost-benefit under uncertainty (Section 17.3.2.1),

have not been widely used. Adaptation measures need to be evaluated

by considering their equity implications (Section 17.3.1.4) especially

under uncertainty (Hansson, 2004).

Intergenerational issues are frequently treated as an economic problem,

with efforts to address them through an ethical framework proving to

be controversial (Nordhaus, 2007; Stern and Treasury of Great Britain,

2007; Stern, 2008). However, future harm may make the lives of future

generations difficult or impossible, a dilemma that involves ethical choices

(Broome, 2008), and therefore discount rates matter (Section 17.4.4.4).

Some authors question whether the rights and interests of future people

should even be subject to a positive discount rate (Caney, 2009). Future

generations cannot defend themselves within current economic

frameworks (Gardiner, 2011), nor can these frameworks properly account

for the dangers, interdependency, and uncertainty under climate change

(Nelson, 2011), even though people’s values may change over time

(Section 16.7). The limits to adaptation raise questions of irreversible

loss and the loss of unique cultural values that cannot necessarily be

easily transferred (Section 16.7), contributing to key vulnerabilities and

informing ethical issues facing mitigation (see Section 19.7.1).
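Why discount rates matter for intergenerational issues can be seen in a short worked example using the standard constant-rate present-value formula, PV = D / (1 + r)^t. The damage figure, the 100-year horizon, and the rates below are hypothetical values chosen for illustration; they are not drawn from the studies cited above.

```python
def present_value(future_damage: float, rate: float, years: int) -> float:
    """Discount a damage incurred `years` from now at a constant annual rate."""
    if rate <= -1.0:
        raise ValueError("rate must be greater than -100%")
    return future_damage / (1.0 + rate) ** years


if __name__ == "__main__":
    damage = 1_000_000.0  # hypothetical damage, arbitrary currency units
    horizon = 100         # hypothetical horizon in years
    for rate in (0.0, 0.01, 0.03, 0.05):  # hypothetical annual rates
        pv = present_value(damage, rate, horizon)
        print(f"rate={rate:.0%}  present value={pv:,.0f}")
```

With a zero rate the future damage counts in full today, while each increase in the rate shrinks its present value, which is why the choice of rate carries the ethical weight discussed in this section.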

Environmental ethics considers the decisions humans may make

concerning a range of biotic impacts (Schalow, 2000; Minteer and Collins,

2010; Nanda, 2012; Thompson and Bendik-Keymer, 2012). Intervention

in natural systems through “assisted colonization” or “managed

relocation” raises important ethical and policy questions (Minteer and

Collins, 2010; Section 4.4.2.4) that include the risk of unintended

consequences (Section 4.4.4). Various claims are made for a more

pragmatic ethics of ecological decision making (Minteer and Collins,

2010), consideration of moral duties toward species (Sandler, 2009),

and ethically explicit and defendable decision making (Minteer and

Collins, 2005a,b).

Cosmopolitan ethics and global justice can lead to successful adaptation

and sustainability (Caney, 2006; Harris, 2010) and support collective

decision making on public matters through voting procedures (Held,

2004). Ethics also concerns the conduct and application of research,

especially research involving stakeholders. Action-based and participatory

research requires that a range of ethical guidelines be followed, taking

consideration of the rights of stakeholders, respect for cultural and

practical knowledge, confidentiality, dissemination of results, and

development of intellectual property (Macaulay et al., 1999; Kindon et

al., 2007; Daniell et al., 2009; Pearce et al., 2009). Ethical agreements

and processes are an essential part of participatory research, whether

taking part as behavioral change processes promoting adaptation or

projects of collaborative discovery (high confidence). Although the climate

change ethics literature is rapidly developing, the related practice of

decision making and implementation needs further development.

Ethical and equity issues are discussed in WGIII AR5 Chapter 3.

2.2.2. Institutional Context

2.2.2.1. Institutions

Institutions are rules and norms held in common by social actors that

guide, constrain, and shape human interaction (North, 1990; Glossary).

Institutions can be formal, such as laws and policies, or informal, such as

norms and conventions. Organizations—such as parliaments, regulatory

agencies, private firms, and community bodies—develop and act in

response to institutional frameworks and the incentives they frame

(Young et al., 2008). Institutions can guide, constrain, and shape human

interaction through direct control, through incentives, and through

processes of socialization (Glossary). Virtually all CIAV decisions will be

made by or influenced by institutions because they shape the choices

made by both individuals and organizations (Bedsworth and Hanak,

2012). Institutional linkages are important for adaptation in complex

and multi-layered social and biophysical systems such as coastal areas

(Section 5.5.3.2) and urban systems (Section 8.4.3.4), and are vital in

managing health (Section 11.6), human security (Sections 12.5.1,

12.6.2), and poverty (Section 13.1). Institutional development and

interconnectedness are vital in mediating vulnerability in social-

ecological systems to changing climate risks, especially extremes

(Chapters 5, 7 to 9, 11 to 13).

The roles of institutions as actors in adaptation are discussed in Section

14.4, in planning and implementing adaptation in Section 15.5, and in

providing barriers and opportunities in Section 16.3. Their roles can be

very diverse. Local institutions usually play important roles in accessing

resources and in structuring individual and collective responses

(Agarwal, 2010; see also Section 14.4.2) but Madzwamuse (2010) found

that in Africa, state-level actors had significantly more influence on formal

adaptation policies than did civil society and local communities.

This suggests a need for greater integration and cooperation among

institutions of all levels (Section 15.5.1.2). Section 14.2.3 identifies four

institutional design issues: flexibility; potential for integration into

existing policy plans and programs; communication, coordination, and

cooperation; and the ability to engage with multiple stakeholders.

Institutions are instrumental in facilitating adaptive capacity, by utilizing

characteristics such as variety, learning capacity, room for autonomous

change, leadership, availability of resources, and fair governance (Gupta

et al., 2008). They play a key role in mediating the transformation of

coping capacity into adaptive capacity and in linking short- and long-term

responses to climate change and variability (Berman et al., 2012).

Most developing countries have weaker institutions that are less capable

of managing extreme events, increasing vulnerability to disasters (Lateef,

2009; Biesbroek et al., 2013). Countries with strong functional institutions

are generally assumed to have a greater capacity to adapt to current

and future disasters. However, Hurricane Katrina of 2005 in the USA and

the European heat wave of 2003 demonstrate that strong institutions

and other determinants of adaptive capacity do not necessarily reduce

vulnerability if these attributes are not translated to actions (IPCC,

2007a; see also Box 2-1, Section 2.4.2.2).

To facilitate adaptation under uncertainty, institutions need to be

flexible enough to accommodate adaptive management processes such

as evaluation, learning, and refinement (Agarwal, 2010; Gupta et al.,

2010; see also Section 14.2.3). Organizational learning can lead to

significant change in organizations’ purpose and function (Bartley,

2007), for example, where non-governmental organizations have moved

from advocacy to program delivery with local stakeholders (Ziervogel

and Zermoglio, 2009; Kolk and Pinkse, 2010; Worthington and Pipa,

2010).

Boundary organizations are increasingly being recognized as important

to CIAV decision support (Guston, 2001; Cash et al., 2003; McNie, 2007;

Vogel et al., 2007). A boundary organization is a bridging institution, social

arrangement, or network that acts as an intermediary between science

and policy (Glossary). Its functions include facilitating communication

between researchers and stakeholders, translating science and technical

information, and mediating between different views of how to interpret

that information. It will also recognize the importance of location-specific

contexts (Ruttan et al., 1994); provide a forum in which information can

be co-created by interested parties (Cash et al., 2003); and develop

boundary objects, such as scenarios, narratives, and model-based decision

support systems (White et al., 2010). Adaptive and inclusive management

practices are considered to be essential, particularly in addressing wicked

problems such as climate change (Batie, 2008). Boundary organizations

also link adaptation to other processes managing global change and

sustainable development.

Boundary organizations already contributing to regional CIAV assessments

include the Great Lakes Integrated Sciences and Assessments Center in

the USA (GLISA; http://www.glisa.umich.edu/), part of the Regional

Integrated Sciences and Assessments Program of the U.S. government

(RISA; Pulwarty et al., 2009); the UK Climate Impacts Program (UKCIP;

UK Climate Impacts Program, 2011); the Alliance for Global Water

Adaptation (AGWA; http://alliance4water.org); and institutions working

on water issues in the USA, Mexico, and Brazil (Kirchhoff et al., 2012;

Varady et al., 2012).

2.2.2.2. Governance

Effective climate change governance is important for both adaptation

and mitigation and is increasingly being seen as a key element of risk

management (high confidence) (Renn, 2008; Renn et al., 2011). Some

analysts propose that governance of adaptation requires knowledge of

anticipated regional and local impacts of climate change in a more

traditional planning approach (e.g., Meadowcroft, 2009), whereas

others propose governance consistent with sustainable development

and resilient systems (Adger, 2006; Nelson et al., 2007; Meuleman and

in ’t Veld, 2010). Quay (2010) proposes “anticipatory governance”—a

flexible decision framework based on robustness and learning (Sections

2.3.3, 2.3.4). Institutional decisions about climate adaptation are taking

place within a multi-level governance system (Rosenau, 2005; Kern and

Alber, 2008). Multi-level governance could be a barrier for successful

adaptation if there is insufficient coordination as it comprises different

regulatory, legal, and institutional systems (Section 16.3.1.4), but is

required to manage the “adaptation paradox” (local solutions to a global

problem), unclear ownership of risks and the adaptation bottleneck

linked to difficulties with implementation (Section 14.5.3). Lack of

horizontal and vertical integration between organizations and policies

leads to insufficient risk governance in complex social-ecological

systems such as coasts (Section 5.5.3.2) and urban areas (Section 8.4),

including in the management of compound risks (Section 19.3.2.4).

Legal and regulatory frameworks are important institutional components

of overall governance, but will be challenged by the pervasive nature

of climate risks (high confidence) (Craig, 2010; Ruhl, 2010a,b). Changes

proposed to manage these risks better under uncertainty include

integration between different areas of law, jurisdictions and scale,

changes to property rights, greater flexibility with respect to adaptive

management, and a focus on ecological processes rather than

preservation (Craig, 2010; Ruhl, 2010a; Abel et al., 2011; Macintosh et

al., 2013). Human security in this report is not seen just as an issue of

rights (Box 12-1), given that a minimum set of universal rights exists

(though not always exercised), but is instead assessed as being subject

to a wide range of forces. Internationally, sea level rise could alter the

maritime boundaries of many nations, which may lead to new claims by

affected nations or loss of sovereignty (Barnett and Adger, 2003). New

shipping routes, such as the North West Passage, will be opened up by

losses in Arctic sea ice (Sections 6.4.1.6, 28.2.6). Many national and

international legal institutions and instruments need to be updated to

face climate-related challenges and decision implementation (medium

confidence) (Verschuuren, 2013).

2.3. Methods, Tools, and Processes for Climate-related Decisions

This section addresses methods, tools, and processes that deal with

uncertainties (Section 2.3.1); describes scenarios (Section 2.3.2); covers

trade-offs and multi-metric valuation (Section 2.3.3); and reviews

learning and reframing (Section 2.3.4).

2.3.1. Treatment of Uncertainties

Most advice on uncertainty, including the latest guidance from the IPCC

(Mastrandrea et al., 2010; see also Section 1.1.2.2), deals with

uncertainty in scientific findings and to a lesser extent confidence.

Although this is important, uncertainty can invade all aspects of decision

making, especially in complex situations. Whether embodied in formal

analyses or in the training and habits of decision makers, applied

management is often needed because unaided human reasoning can

produce mismatches between actions and goals (Kahneman, 2011). A

useful high-level distinction is between ontological uncertainty—what

we know—and epistemological uncertainty—how different areas of

knowledge and “knowing” combine in decision making (van Asselt and

Rotmans, 2002; Walker et al., 2003). Two other areas of relevance are

ambiguity (Brugnach et al., 2008) and contestedness (Klinke and Renn,

2002; Dewulf et al., 2005), commonly encountered in wicked problems/

systemic risks (Renn and Klinke, 2004; Renn et al., 2011).

Much of this uncertainty can be managed through framing and decision

processes. For example, a predict-then-act framing is different to an

assess-risk-of-policy framing (SREX Section 6.3.1 and Figure 6.2; Lempert

et al., 2004). In the former, also known as the “top-down,” model-first,

impacts-first, science-first, or standard approach, climate or impact

uncertainty is described independently of other parts of the decision

problem. For instance, probabilistic climate projections (see Figure 21-4

or WGI AR5 Chapters 11 and 12; Murphy et al., 2009) are generated for

wide application, and thus are not tied to any specific choice. This follows

the cause and effect model described in Section 2.1. The basic structure

of IPCC Assessment Reports follows this pattern, with WGI laying out

what is known and uncertain about current and future changes to the

climate system. Working Groups II and III then describe impacts resulting

from and potential policy responses to those changes (Jones and Preston,

2011).

In contrast, the “assess-risk-of-policy” framing (Lempert et al., 2004;

UNDP, 2005; Carter et al., 2007; Dessai and Hulme, 2007) starts with the

decision-making context. This framing is also known as “context-first”

(Ranger et al., 2010); “decision scaling” (Brown et al., 2011); “bottom-

up”; vulnerability, tipping point (Kwadijk et al., 2010); critical threshold

(Jones, 2001); or policy-first approaches (SREX Section 6.3.1). In engaging

with decision makers, the “assess-risk-of-policy” approach often requires

information providers to work closely with decision makers to understand

their plans and goals, before customizing the uncertainty description to

focus on those key factors. This can be very effective, but often needs

to be individually customized for each decision context (Lempert and

Kalra, 2011; Lempert, 2012), requiring collaboration between researchers

and users (see Box 2-1). A “predict-then-act” framing is appropriate

when uncertainties are shallow, but when uncertainties are deep, an

“assess-risk-of-policy” framing is more suitable (Dessai et al., 2009).
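
The contrast between the two framings can be illustrated with a minimal sketch. It assumes a hypothetical table of policy costs under three climate futures, and it uses minimax regret as one possible robustness criterion; the policy names, cost values, and choice of criterion are illustrative assumptions and are not drawn from the cited studies.

```python
# Hypothetical costs (lower is better) of three adaptation policies under
# three climate futures. All names and numbers are illustrative assumptions.
COSTS = {
    "low_levee":  {"mild": 1.0, "moderate": 4.0, "severe": 12.0},
    "mid_levee":  {"mild": 3.0, "moderate": 3.5, "severe": 6.0},
    "high_levee": {"mild": 6.0, "moderate": 6.0, "severe": 6.5},
}


def predict_then_act(costs, probabilities):
    """Choose the policy with the lowest expected cost, given one
    assumed probability distribution over futures."""
    def expected_cost(policy):
        return sum(p * costs[policy][future]
                   for future, p in probabilities.items())
    return min(costs, key=expected_cost)


def assess_risk_of_policy(costs):
    """Choose the policy with the smallest worst-case regret across
    futures, without assigning probabilities to those futures."""
    futures = list(next(iter(costs.values())))
    # Best achievable cost in each future, over all policies.
    best = {f: min(costs[p][f] for p in costs) for f in futures}

    def max_regret(policy):
        return max(costs[policy][f] - best[f] for f in futures)
    return min(costs, key=max_regret)
```

With probabilities weighted toward the mild future, the first function favors the cheapest policy, whereas the second favors a policy that performs acceptably in every future. The two answers can differ when the probabilities themselves are deeply uncertain.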

The largest focus on uncertainty in CIAV has been on estimating climate

impacts such as streamflow or agricultural yield changes and their

consequent risks. Since AR4, the treatment of these uncertainties has

advanced considerably. For example, multiple models of crop responses

to climate change have been compared to estimate inter-model

uncertainty (Asseng et al., 2013). Although many impact studies still

characterize uncertainty by using a few climate scenarios, there is a

growing literature that uses many climate realizations and also assesses

uncertainty in the impact model itself (Wilby and Harris, 2006; New et

al., 2007). Some studies propagate uncertainties to evaluate adaptation

options locally (Dessai and Hulme, 2007), by assessing the robustness

of a water company’s plan to climate change uncertainties, or regionally

(Lobell et al., 2008), by identifying which regions are most in need of

adaptation to food security under a changing climate. Alternatively, the

critical threshold approach, where the likelihood of a given criterion can

be assessed as a function of climate change, is much less sensitive to

input uncertainties than assessments estimating the “most likely”

outcome (Jones, 2010). This is one of the mainstays of robustness

assessment discussed in Section 2.3.3.
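
As a minimal sketch of the critical threshold idea, the likelihood of exceeding a criterion can be estimated as the fraction of sampled climate outcomes that reach a critical level. The function name, the use of warming as the climate variable, and the sample values in the usage note are hypothetical and are not taken from Jones (2010).

```python
def exceedance_likelihood(warming_samples, critical_warming):
    """Return the fraction of sampled warming values (in degrees C)
    that reach or exceed a critical threshold.

    Both arguments are illustrative assumptions: the samples stand in
    for an ensemble of climate projections, and the threshold for the
    warming at which a system-specific criterion is breached.
    """
    if not warming_samples:
        raise ValueError("at least one sample is required")
    hits = sum(1 for w in warming_samples if w >= critical_warming)
    return hits / len(warming_samples)
```

For example, with the hypothetical samples 1.0, 1.5, 2.0, 2.5, 3.0, and 3.5 and a critical warming of 2.5, three of the six samples reach the threshold, giving a likelihood of 0.5.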

2.3.2. Scenarios

A scenario is a story or image that describes a potential future, developed

to inform decision making under uncertainty (Section 1.1.3). A scenario

is not a prediction of what the future will be but rather a description of

how the future might unfold (Jäger et al., 2008). Scenario use in the

CIAV research area has expanded significantly beyond climate into

broader socioeconomic areas as it has become more mainstream (high